New types of therapeutics and how informatics help with their development.

Biopharmaceutical organizations are constantly developing new therapeutics. However, there has been a recent change in the definition of what constitutes a therapeutic. Historically, the vast majority of new medicines were what is termed small-molecule based. This means that the “entity” that delivers the effect can be constructed and synthesized in a laboratory using building-block chemicals and synthetic chemistry. Many well-known medicines are of this kind: paracetamol/acetaminophen, aspirin, fluoxetine, etc.

This focus drove the development of many informatics tools and approaches that supported their discovery and development process and the underlying core business drivers:

- Innovation

- Speed to market

- Efficiency

Computer-based representations, registration systems, property calculators, analytical support tools, etc., all evolved to support the management of the scientific data, intellectual property, and the development process. One of the key drivers for the tools revolved around sharing of information to answer key questions like:

- Have we made this before?

- What are the agreed properties that we have defined as an organization?

- What does this molecule look like?

- Do we understand the structure-function relationships of changes on the molecule?

This long history means that the informatics tools are very mature. However, this is not the case for the new biologic-based therapeutics. A plethora of biology R&D organizations are using novel science and technologies in the quest for new therapeutics, including messenger RNA (mRNA), combination gene therapy, and antibody-drug conjugates.

All of these companies have a similar requirement when it comes to their informatics support: it is not small-molecule chemistry based, and the requirements differ from those seen in the small-molecule world. Currently, the biologics area does not have the same depth of mature tools.

The biologics area needs informatics tools that cope with ambiguity, unknowns, large molecules, complex biology, and new data types. Reporting and analysis need to be flexible and able to expand and adapt as the science develops, which, for the science types above, happens very quickly. The vast majority of large-molecule therapeutics are synthesized by living systems (cell lines). These are, by their very nature, less predictable and far more complex than the synthetic processes used in small-molecule development. This is compounded by the fact that biologics production is often continuous (not batch-centric).

So, the major areas of informatics support fall into the same buckets as small molecule discovery and development, but the detailed requirements and science are different. The areas of required support to help the biopharma organizations with innovation, speed to market, and efficiency are:

- Biomolecule structure and representation: Providing formats and methods to support duplicate checking, IP protection, and structure-activity analysis. These deliver reduced replication of work, greater potential for repurposing known molecules, and greater protection of intellectual property.

- Scientific tools and data management: Support for molecular biology, cell biology, and mechanism-of-action studies, along with high-dimensional testing and analysis such as HCS, image-based assays, and time-based assays. This improves the speed and quality of decisions made during the development process by ensuring that the right people get the right information at the right time, thereby helping with speed to market.

- Process development, scale up, and manufacturing: Support in cell line development, process development, fermentation, and purification optimization, coupled with analytical testing support (real-time, at-line, and off-line testing and automation). This is all about efficiency and ensuring that the shortest, most direct path is found to a product of the required quality whose behavior is understood as the process variables change during manufacture (the foundations of QbD).

Some notable areas in scientific tools and data management are emerging or have evolved very quickly to meet demand, but common problems still need to be solved.

Biomolecule Structure Representation Support

Support for structure representation has evolved over time: protein and DNA sequence formats have existed for many years (FASTA, Swiss-Prot), and structural details have been supported by the PDB (Protein Data Bank). However, these do not provide a good method for exchanging and comparing biological entities. They do not contain enough information, and they do not support non-natural peptides and nucleic acids, post-translational modifications, or hybrid molecules made from other building blocks such as polymers, linkers, and small molecules.

Hierarchical Editing Language for Macromolecules (HELM) has been developed to solve the first and perhaps most fundamental problem: how to represent these complex entities in a consistent, lossless fashion. It can deal with the subtleties of biologics, such as post-translational modifications, cross-linking of domains, linkers, and ambiguity. It allows molecules to be defined, stored, computationally compared, and shared in a standard manner.
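To make the notation more concrete, the following minimal Python sketch splits a simple HELM string into its polymer and connection sections. The example string (a five-residue peptide with a bridge between the two cysteines) and the helper name `split_helm` are illustrative assumptions, not taken from this article. The naive splitting on `$`, `|`, and `.` ignores features such as inline SMILES monomers and annotations, so this is a sketch of the idea rather than a replacement for a real HELM parser.

```python
import re

# Hypothetical example: a 5-residue peptide with a bridge between Cys2 and Cys5.
HELM = "PEPTIDE1{A.C.G.K.C}$PEPTIDE1,PEPTIDE1,2:R3-5:R3$$$"

# A simple polymer looks like TYPE<number>{monomer.monomer...}
POLYMER_RE = re.compile(r"^([A-Z]+\d+)\{(.*)\}$")


def split_helm(helm):
    """Naively split a HELM string into polymers and connections."""
    sections = helm.split("$")  # sections are separated by '$'
    polymers = {}
    for chunk in filter(None, sections[0].split("|")):
        match = POLYMER_RE.match(chunk)
        if match is None:
            raise ValueError(f"unrecognized polymer: {chunk!r}")
        polymer_id, body = match.groups()
        polymers[polymer_id] = body.split(".")  # monomers are separated by '.'
    connections = []
    if len(sections) > 1:
        connections = [c for c in sections[1].split("|") if c]
    return {"polymers": polymers, "connections": connections}


parsed = split_helm(HELM)
print(parsed["polymers"])     # monomer list per polymer ID
print(parsed["connections"])  # raw connection strings
```

Because the polymers and the connections between them are held as explicit, separate sections, cross-links and attached linkers can be stored and exchanged without flattening the molecule into a plain sequence.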

Core to the standard is also the evolution of tools to support the drawing of biomolecules. The acceptance of the HELM standard has seen some commercial products emerge that provide a far better user experience and richer functional capabilities.

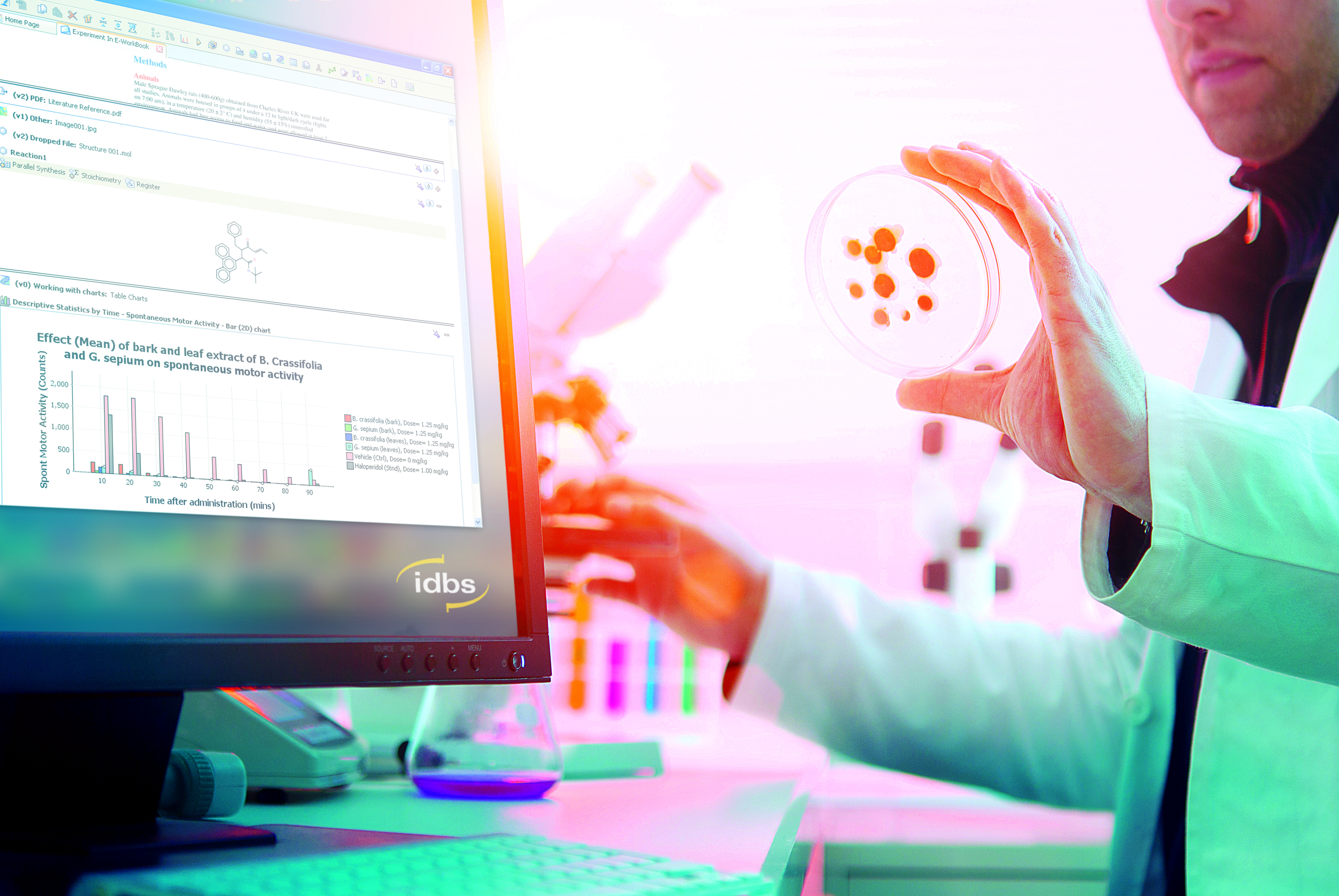

Scientific Tools and Data Management

Although scientific tools and data management cannot be described in their entirety in a single article, there are some notable areas that are emerging (or that have evolved very quickly to meet demand). However, there are a few common problems that need to be solved, such as how to reference and link the entities that go into producing a biomolecule. The biological systems that produce the biomolecule of interest are built up over a set of steps, each one adding a critical element or component.
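One way to picture the linking problem is as a parent-child graph in which each entity records what it was built from. The sketch below is only an illustration under that assumption: the entity IDs, the `PARENTS` mapping, and the `lineage` helper are all hypothetical and do not describe any particular product.

```python
# Hypothetical entity IDs; each entry maps an entity to the entities it was built from.
PARENTS = {
    "PLASMID-001": ["GENE-HC-01", "GENE-LC-01", "VECTOR-A"],
    "CLONE-017": ["PLASMID-001", "HOST-CHO-K1"],
    "MCB-003": ["CLONE-017"],     # master cell bank derived from the clone
    "BATCH-0042": ["MCB-003"],    # production run started from the cell bank
}


def lineage(entity, parents=PARENTS):
    """Return every upstream entity of `entity`, nearest ancestors first."""
    seen = []
    queue = list(parents.get(entity, []))
    while queue:
        current = queue.pop(0)      # breadth-first walk up the graph
        if current in seen:
            continue                # skip entities reached by more than one path
        seen.append(current)
        queue.extend(parents.get(current, []))
    return seen


print(lineage("BATCH-0042"))
```

With links like these in place, a question such as "which vector and host cell line sit behind this batch?" becomes a simple traversal rather than a search through notebooks and reports.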

When drawn on a piece of paper, the high level process of developing a biologic is not complex, but the details and how it is controlled and managed are extremely complex. The devil is in the detail. It involves many different groups, information transfer between groups, decision gating, iterative processes, and reporting. The importance of good data management is obvious: without it, the complexities of the process can quickly become overwhelming and introduce points of risk and failure. Proper capture and management of the data can reduce these risks and eliminate points of failure completely if done correctly.

The data required to make decisions and to support the overall process is not document-based or unstructured data. Structured data is what drives the process, delivers quality, and helps ensure compliance. For a question such as “What is the concentration of an additive in the media?” structured data is needed (2.5 mg/ml), not a document or phrase. Furthermore, data needs to be stored in a manner that allows it to be aggregated with other data so it can be plotted, charted, and analyzed scientifically.
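As a rough illustration of what "structured" means here, the Python sketch below stores each measurement as a record with an explicit value and unit and then aggregates across runs. The `Measurement` fields, the run IDs, and all values other than the 2.5 mg/ml figure from the text are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Measurement:
    run_id: str
    parameter: str
    value: float
    unit: str


# Hypothetical records; only the 2.5 mg/ml value comes from the text above.
records = [
    Measurement("RUN-01", "additive_concentration", 2.5, "mg/ml"),
    Measurement("RUN-02", "additive_concentration", 2.7, "mg/ml"),
    Measurement("RUN-03", "additive_concentration", 2.3, "mg/ml"),
]


def summarize(records, parameter):
    """Aggregate one parameter across runs, refusing to mix units."""
    selected = [r for r in records if r.parameter == parameter]
    units = {r.unit for r in selected}
    if len(units) != 1:
        raise ValueError(f"expected exactly one unit for {parameter!r}, got {units}")
    values = [r.value for r in selected]
    return {
        "unit": units.pop(),
        "n": len(values),
        "mean": mean(values),
        "min": min(values),
        "max": max(values),
    }


summary = summarize(records, "additive_concentration")
print(summary)
```

A free-text note such as "additive at roughly the usual level" could not be averaged, range-checked, or plotted in this way, which is the practical difference between a document and structured data.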

Process Development, Scale Up, and Manufacturing Support

This is a massively complex problem, as thousands of variables can potentially impact important product properties such as stability, purity, efficacy, and structure. Currently, many bioprocess organizations leverage a combination of tools to help track workflow, integrate the instrumentation and fermenters in real time, aggregate data across runs, and conduct statistical analysis on results. The combination of these tools, coupled with structured data management, is critical. Together they deliver greater transparency and quality of data, coupled with speedier and better decision making.

These are not the only benefits seen when these technologies are leveraged. Speed and efficiency in the laboratory can also be dramatically improved by reducing time spent on manual transcription and manipulation of data. Furthermore, the handoff between process development/tech ops and manufacturing can be streamlined: a development process report can be delivered with considerably more context and additional information about how the process was developed and what was tested. This gives manufacturing far greater insight into the proposed process and can reduce the amount of time spent “repeating” experiments to establish the impact of certain variables on product characteristics.

Manufacturing is well supported with technology and informatics systems, with PAT, MES, and LIMS taking the majority of the workload. These systems ensure that the process is followed and highlight where deviations occur and how they are managed. The benefits of these informatics systems are obvious in this area and are focused on regulatory compliance and quality, two major drivers for any product organization.

Conclusion

The field is moving rapidly, and most acknowledge that we will likely see a renaissance of the biopharma industry due to the application of biologics in treating disease. Despite the overwhelming groundswell and poster-child examples of success, like Herceptin, the fundamental drivers in biopharma businesses are the same. Innovation, speed to market, and efficiency all play a part in how biologics are developed and brought to market. With competition in the space increasing rapidly, organizations will need to embrace informatics to keep meeting their objectives. The foundational pieces (such as data management and process support) are only being used in some areas by select companies. Gaps and opportunities exist for the application of existing informatics technologies, but new technology must also be developed to support both the rapidly evolving science and the evolving business aspects.